▼About

I am a PhD student researching robotics at ETH Zurich in the Soft Robotics Lab and the Robotic Materials Department at the Max Planck Institute for Intelligent Systems in Stuttgart, Germany. I am advised by Dr. Robert Katzschmann and Dr. Christoph Keplinger. I started my PhD in the summer of 2022 and plan to graduate in the summer of 2026 (if all goes well!).

I like making, controlling, and playing with robots. Through my research, I hope to create robotic systems that can act in the chaotic, beautiful everyday environments that we live in, to hopefully make our lives a little bit easier. Currently I research how to automatically generate robotic hand designs that are optimized for dexterous manipulation tasks, so that we can create more capable robotic hands that strike the right balance between simplicity and dexterity.

I research robots as a PhD student at ETH Zurich and the Max Planck Institute. I started in the summer of 2022 and plan to graduate in the summer of 2026.

▼CV

updated Jan 2024

▼Publications

full list of publications including co-authored papers

▼Blog (Japanese)

LATEST POSTS

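- How I dealt with connections not going through the SoftEther/SSTP VPN over IPv6 - April 13, 2024

- [Live Report] I went to the final European show of Ado's world tour "Wish" (Düsseldorf, Germany) - March 17, 2024

- The future of Japan is bright, or so I feel - March 3, 2024

- All posts…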

▼Blog(English)

A subset of my Japanese blog, translated into English

▼Projects

Real World Robotics: simulation and RL environment setup

A hands-on project class at ETH Zurich to design, build, and control real robotic hands

Sep 2023 ~ Dec 2023

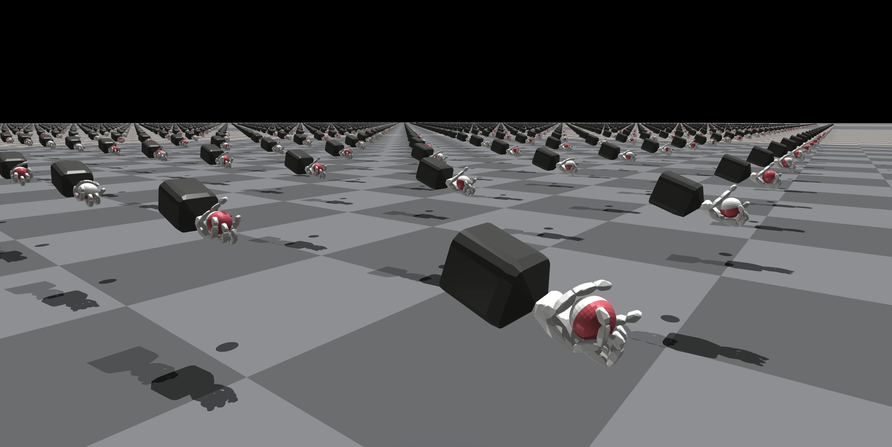

Real World Robotics is an intensive course for master's students at ETH Zurich, in which teams of students build their own articulated robotic hand and program a robust controller for it with reinforcement learning. I contributed to this course by introducing students to the faive_gym framework, which I developed together with Benedek Forrai and which lets users easily import their own robot hand into IsaacGym to train it on dexterous tasks. I also made a video tutorial on the faive_gym framework, demonstrating how to use it with the Faive Hand model, customize the environment, and finally load custom robots into the scene.

If you are interested in the course itself, all the lectures are available on the lab’s YouTube channel and on the course website. Please have a look!

Dexterous Manipulation Research at the Soft Robotics Lab, ETH Zurich

applying reinforcement learning to the Faive Hand for dexterous ball manipulation

Oct 2022 ~ Sept 2023

I set up a GPU-based simulation environment for the Faive Hand, a biomimetic dexterous robot hand developed at the Soft Robotics Lab. By training thousands of simulated robot hands in parallel, a policy to rotate a sphere in-hand could be learned in about one hour. I implemented the MJCF model of the Faive Hand, created an RL environment that works with the open-source IsaacGymEnvs RL framework, and restructured the framework to make it easier to adapt to new robot hand models or tasks. I released this as the open-source faive_gym library, which was also used in the Real World Robotics course.

Publications

- Yasunori Toshimitsu, Benedek Forrai, Barnabas Gavin Cangan, Ulrich Steger, Manuel Knecht, Stefan Weirich, Robert K. Katzschmann, Getting the Ball Rolling: Learning a Dexterous Policy for a Biomimetic Tendon-Driven Hand with Rolling Contact Joints, 2023 IEEE-RAS International Conference on Humanoid Robots (Humanoids 2023), Austin, Texas, USA, 2023

Biomimetic Musculoskeletal Humanoid Robots

Research in biomimetic control of tendon-driven musculoskeletal humanoids

Apr 2019 ~ Mar 2022

I researched the control of musculoskeletal robots at the JSK Robotics Lab at UTokyo.

These robots mimic the musculoskeletal structure of the human body, with the hope that they can generate more natural movements than conventional axis-driven robots.

However, controlling such robots is not straightforward: because the muscles are redundant, an infinite number of commands can achieve even a simple reaching movement with the arm. My research focused on controlling these robots by applying principles discovered in neuroscience. Since the hardware of these robots is designed after the human body, I hoped that a similar approach to their software would yield effective motions.
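To make the redundancy concrete, here is a toy sketch for a single joint. It assumes each muscle contributes torque r_i·f_i, with a signed moment arm r_i and a tension f_i ≥ 0 (muscles can only pull), and it resolves the redundancy with a generic minimum-effort rule (least sum of squared tensions). This textbook criterion is swapped in purely for illustration and is not the neuroscience-inspired controller described above; the moment arms, torque, and function names are hypothetical.

```python
from typing import List


def joint_torque(moment_arms: List[float], tensions: List[float]) -> float:
    """Net joint torque (N*m) from muscles with signed moment arms (m)."""
    return sum(r * f for r, f in zip(moment_arms, tensions))


def min_effort_tensions(moment_arms: List[float], tau: float) -> List[float]:
    """Nonnegative tensions minimizing sum(f**2) subject to joint_torque(...) == tau.

    From the KKT conditions, f_i = max(0, lam * r_i): only muscles whose moment arm
    points in the direction of tau are recruited, in proportion to their moment arm.
    """
    if tau == 0.0:
        return [0.0] * len(moment_arms)
    helpers = [r for r in moment_arms if r * tau > 0]
    if not helpers:
        raise ValueError("no muscle can pull in the requested direction")
    lam = tau / sum(r * r for r in helpers)
    return [lam * r if r * tau > 0 else 0.0 for r in moment_arms]


if __name__ == "__main__":
    # Hypothetical moment arms in metres: two flexors (+) and one extensor (-).
    arms = [0.02, 0.03, -0.025]
    f = min_effort_tensions(arms, tau=1.3)
    print("min-effort tensions [N]:", [round(x, 2) for x in f])
    print("torque [N*m]:", round(joint_torque(arms, f), 6))

    # Co-contraction: +0.3 N*m from flexor 2 cancels -0.3 N*m from the extensor,
    # so this is another valid command for the same torque, just costlier.
    co = [f[0], f[1] + 10.0, f[2] + 12.0]
    print("co-contracted torque [N*m]:", round(joint_torque(arms, co), 6))
```

The co-contracted command at the end produces exactly the same joint torque, which is the "infinite set of commands" problem in miniature: some selection principle is always needed.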

Publications

- Y. Toshimitsu, K. Kawaharazuka, A. Miki, K. Okada, M. Inaba, DIJE: Dense Image Jacobian Estimation for Robust Robotic Self-Recognition and Visual Servoing, in 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS2022)

- Y. Toshimitsu, K. Kawaharazuka, M. Nishiura, Y. Koga, Y. Omura, Y. Asano, K. Okada, K. Kawasaki, and M. Inaba, Biomimetic Operational Space Control for Musculoskeletal Humanoid Optimizing Across Muscle Activation and Joint Nullspace, in 2021 International Conference on Robotics and Automation (ICRA 2021), presentation video

- Y. Toshimitsu, K. Kawaharazuka, K. Tsuzuki, M. Onitsuka, M. Nishiura, Y. Koga, Y. Omura, M. Tomita, Y. Asano, K. Okada, K. Kawasaki, and M. Inaba, Biomimetic Control Scheme for Musculoskeletal Humanoids Based on Motor Directional Tuning in the Brain, in Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS2020)

- 利光 泰徳, 河原塚 健人, 都築 敬, 鬼塚 盛宇, 西浦 学, 古賀 悠矢, 大村 柚介, 冨田 幹, 浅野 悠紀, 岡田 慧, 川崎 宏治, 稲葉 雅幸, Upper-Limb Motion of a Musculoskeletal Humanoid by Muscle Tension Control Based on the Motor Directional Tuning Phenomenon (in Japanese), in Proceedings of the JSME Conference on Robotics and Mechatronics 2020 (ROBOMECH20J), 1P1-G05, 2020, video

Research Visit at the Soft Robotics Lab, ETH Zurich

Design, fabrication, and modeling of SoPrA, a soft continuum proprioceptive arm with integrated sensing

Dec 2020 ~ May 2021

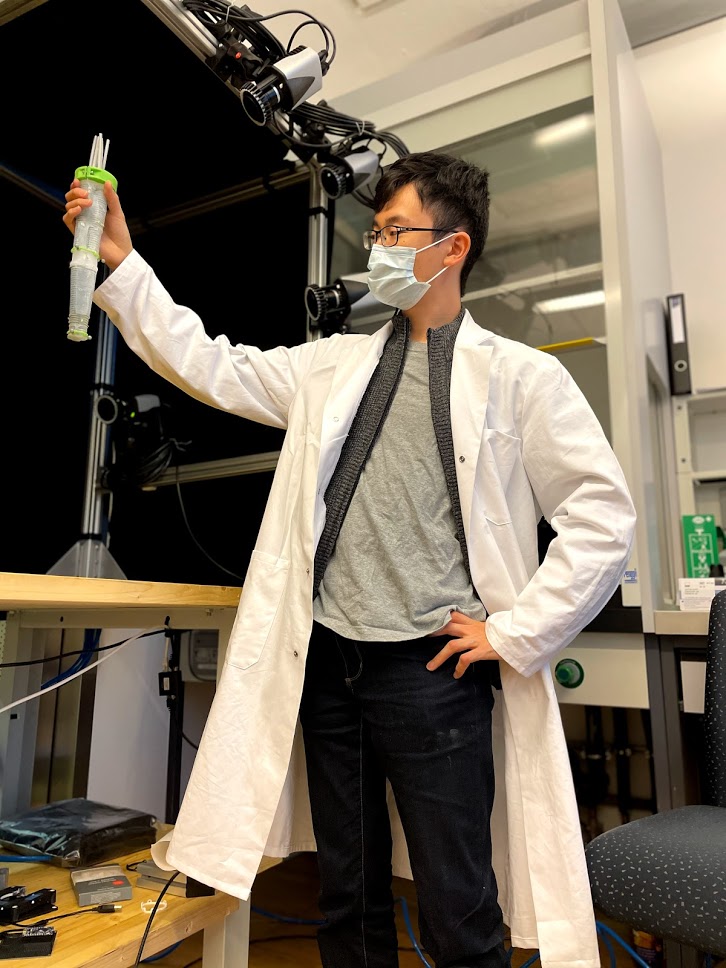

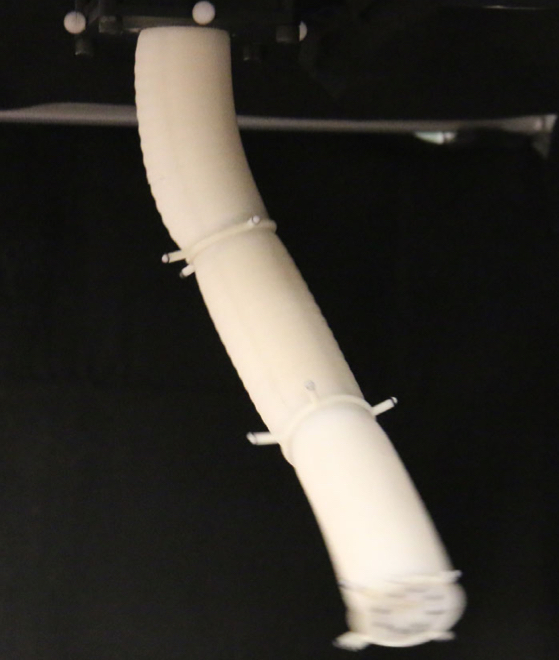

This was a 5½-month research visit, during my Master’s program at UTokyo, to the then-new Soft Robotics Lab under the guidance of Prof. Dr. Robert Katzschmann. I created a new design for a soft pneumatic continuum arm that uses fiber reinforcement to restrict radial expansion and contains internal proprioceptive sensors to measure the bending state of the robot, and I developed an associated model that describes the dynamic behavior of the soft robot.

Publications

- Yasunori Toshimitsu, Ki Wan Wong, Thomas Buchner, Robert Katzschmann, SoPrA: Fabrication & Dynamical Modeling of a Scalable Soft Continuum Robotic Arm with Integrated Proprioceptive Sensing, 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2021), Prague, Czech Republic, 2021

Internship at Connected Robotics

Lead developer for the original version of the ice cream robot

Feb 2018 ~ Aug 2018

- Connected Robotics

- Article on Wantedly.com, in Japanese (1/3, 2/3, 3/3)

- my blog post, in Japanese

- Nikkei Business: Adopted by Seven & i Food Systems, cooking robots work for minimum wage (Japanese article)

Connected Robotics is a Tokyo-based company that aims to revolutionize cooking through the use of robots. During my first internship there, from February to August 2018, I was tasked with starting the development of a new robot that makes soft serve ice cream.

The robot is designed not just to serve ice cream but also to entertain customers, which is why it is designed as a character of its own. It also has a wide-angle camera to detect customers and interact with them.

Its hand contains a weight sensor (load cell) that measures the load on the hand. This reading is used to dynamically adjust the speed of the spiraling motion while serving, so the robot serves about the same amount of ice cream each time, regardless of the flow rate.
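The load-cell feedback can be sketched as follows. Assuming the goal is a constant mass of ice cream per radian of spiral, the angular speed should scale with the flow rate, which is estimated here from successive weight readings and smoothed with an exponential moving average. The class name, target mass per radian, smoothing factor, and speed limits are all hypothetical, not values from the actual robot.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SpiralSpeedController:
    """Scale the spiral's angular speed to the flow rate seen by the load cell.

    All parameters are illustrative assumptions, not values from the real robot.
    """

    grams_per_rad: float          # target mass of soft serve laid per radian of spiral
    min_speed: float = 0.5        # rad/s, assumed lower actuator limit
    max_speed: float = 6.0        # rad/s, assumed upper actuator limit
    alpha: float = 0.3            # smoothing factor of the flow-rate moving average
    _last_weight: Optional[float] = field(default=None, init=False, repr=False)
    _flow: float = field(default=0.0, init=False, repr=False)  # smoothed flow, g/s

    def update(self, weight_g: float, dt: float) -> float:
        """Take a load-cell reading (grams) dt seconds after the previous one and
        return the commanded spiral speed in rad/s."""
        if self._last_weight is not None and dt > 0:
            # Flow rate = rate of weight increase on the hand; ignore negative noise.
            raw_flow = max(0.0, (weight_g - self._last_weight) / dt)
            self._flow = self.alpha * raw_flow + (1.0 - self.alpha) * self._flow
        self._last_weight = weight_g
        # Constant mass per radian => angular speed proportional to flow rate.
        speed = self._flow / self.grams_per_rad
        return min(self.max_speed, max(self.min_speed, speed))


if __name__ == "__main__":
    ctrl = SpiralSpeedController(grams_per_rad=5.0)
    weight = 0.0
    speed = ctrl.update(weight, dt=0.1)
    for _ in range(50):
        weight += 10.0 * 0.1   # simulated steady flow of 10 g/s, sampled every 0.1 s
        speed = ctrl.update(weight, dt=0.1)
    print(f"spiral speed: {speed:.2f} rad/s")
```

Doubling the flow rate roughly doubles the spiral speed once the average settles, so each turn of the spiral receives about the same mass.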

After five months of development, the robot was deployed at Huis Ten Bosch, a theme park in Nagasaki, Japan. I still occasionally intern at Connected Robotics and continue to develop the robot. Its newest iteration, with improved hardware, motion control, and software architecture, can be seen at the Ito Yokado shopping center in Makuhari.

Talk on the development of the soft serve ice cream robot

Oct 2019

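On October 31, 2019, I gave a talk as the developer in charge of the soft serve ice cream robot at "DOBOT User Conference 2019: Automation with Compact Arms and Collaborative Robots Expanding into New Fields", hosted by TechShare Inc. and DOBOT at Akihabara UDX.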

Ionobot

An autonomous oceanic surface vehicle for ionospheric measurements

Sep 2018 ~ Dec 2018

- my blog post about it, in Japanese

The Ionobot was developed in collaboration with the MIT Lincoln Lab as part of the 2.013 Engineering Systems Design capstone class. Fluctuations in the ionization level of the Earth’s upper atmosphere cause inaccuracies in GPS and radio signals. In this project, we developed “Ionobot”, an autonomous surface vehicle that serves as an ocean platform for ionospheric measurement, enabling measurements that existing ground-based stations cannot make. The boat must autonomously navigate to its designated measurement location and remain there, under its own power, for up to 6 weeks at a time.

In this project, I served as manager of the power supply system team. We defined the required specifications through repeated discussions and negotiations with the other teams (especially over solar panel size and battery weight), designed a system that met the required power output under the expected oceanic and climate conditions, and evaluated the performance of the solar panels experimentally.
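The panel-size versus battery-weight negotiation comes down to simple energy bookkeeping. A back-of-the-envelope sketch, assuming a constant average load, panels rated per square metre, a lumped loss fraction, and a battery that must carry the load through a given number of sunless days. Every input below is hypothetical and chosen only to show the trade-off; none are Ionobot figures.

```python
from dataclasses import dataclass


@dataclass
class PowerBudget:
    panel_area_m2: float
    battery_wh: float
    battery_kg: float


def size_power_system(
    avg_load_w: float,
    peak_sun_hours: float,
    panel_w_per_m2: float,
    system_losses: float,
    autonomy_days: float,
    max_depth_of_discharge: float,
    battery_wh_per_kg: float,
) -> PowerBudget:
    """Rough solar + battery sizing for a load that runs around the clock.

    avg_load_w: average electrical load over 24 h
    peak_sun_hours: equivalent hours/day at the panel's rated irradiance
    panel_w_per_m2: rated panel output per unit area
    system_losses: fraction lost to charging, wiring, soiling, etc. (0..1)
    autonomy_days: days the battery must carry the load with no sun
    max_depth_of_discharge: usable fraction of battery capacity (0..1)
    """
    daily_wh = avg_load_w * 24.0
    # Panels must replace one day's consumption from one day's sunlight.
    harvest_per_m2 = peak_sun_hours * panel_w_per_m2 * (1.0 - system_losses)
    panel_area = daily_wh / harvest_per_m2
    # Battery must cover the sunless days without exceeding its usable depth.
    battery_wh = daily_wh * autonomy_days / max_depth_of_discharge
    return PowerBudget(panel_area, battery_wh, battery_wh / battery_wh_per_kg)


if __name__ == "__main__":
    # Purely hypothetical inputs, chosen only to illustrate the trade-off.
    for autonomy in (1, 3, 5):
        b = size_power_system(5.0, 4.0, 200.0, 0.2, autonomy, 0.8, 150.0)
        print(f"{autonomy} sunless days -> panel {b.panel_area_m2:.2f} m^2, "
              f"battery {b.battery_wh:.0f} Wh ({b.battery_kg:.1f} kg)")
```

In this simple model the panel area is set by the daily energy budget, while every extra sunless day the boat must ride out adds battery weight linearly.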

Soft Robotics Research at MIT CSAIL

Development of C++ program for dynamic control of pneumatic soft robot

Sep 2018 ~ Jan 2019

- my blog post about the experience, in Japanese

During my exchange program at MIT, I joined the Distributed Robotics Laboratory as a UROP student and conducted experiments on a soft pneumatic arm made of silicone. My contributions to the project included writing most of the C++ software implementing the proposed dynamic controller for the arm, fabricating the soft arm by casting silicone, and proposing new parametrizations for describing the configuration of the arm.
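For readers unfamiliar with the piecewise constant curvature (PCC) description used in this project's publication, here is a minimal planar sketch of standard PCC forward kinematics: each segment is a circular arc described by its curvature κ and length L, and segment tips are chained by composing rigid transforms. It covers only the kinematic idea; the augmented rigid-body dynamic model and the C++ controller are not reproduced, and the function names are hypothetical.

```python
import math
from typing import List, Tuple


def segment_tip(kappa: float, length: float) -> Tuple[float, float, float]:
    """Tip displacement (dx, dy) and rotation of one constant-curvature arc,
    expressed in the frame at the segment's base (x along the base tangent)."""
    theta = kappa * length  # total bending angle of the segment
    if abs(kappa) < 1e-9:
        return length, 0.0, theta  # straight-segment limit avoids dividing by ~0
    return math.sin(theta) / kappa, (1.0 - math.cos(theta)) / kappa, theta


def pcc_forward_kinematics(
    kappas: List[float], lengths: List[float]
) -> Tuple[List[Tuple[float, float]], float]:
    """Planar PCC kinematics: returns segment end points and the final tip angle."""
    x, y, phi = 0.0, 0.0, 0.0
    points = [(x, y)]
    for kappa, length in zip(kappas, lengths):
        dx, dy, dtheta = segment_tip(kappa, length)
        # Rotate the local displacement into the world frame, then accumulate.
        x += math.cos(phi) * dx - math.sin(phi) * dy
        y += math.sin(phi) * dx + math.cos(phi) * dy
        phi += dtheta
        points.append((x, y))
    return points, phi


if __name__ == "__main__":
    # Hypothetical two-segment arm, each 0.2 m long, each bending a quarter turn.
    seg_len = 0.2
    kappa = (math.pi / 2) / seg_len
    pts, tip_angle = pcc_forward_kinematics([kappa, kappa], [seg_len, seg_len])
    print([(round(px, 3), round(py, 3)) for px, py in pts], round(tip_angle, 3))
```

Two quarter-turn segments trace a half circle, so the tip ends up directly above the base, pointing backwards.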

Publications

- R. K. Katzschmann, C. D. Santina, Y. Toshimitsu, A. Bicchi and D. Rus, Dynamic Motion Control of Multi-Segment Soft Robots Using Piecewise Constant Curvature Matched with an Augmented Rigid Body Model, 2019 2nd IEEE International Conference on Soft Robotics (RoboSoft), Seoul, Korea (South), 2019

Laser-cut Pino Gacha

Dec 2019

- Morinaga Milk Industry official website: Pino Gacha (Japanese page)

not affiliated with Morinaga

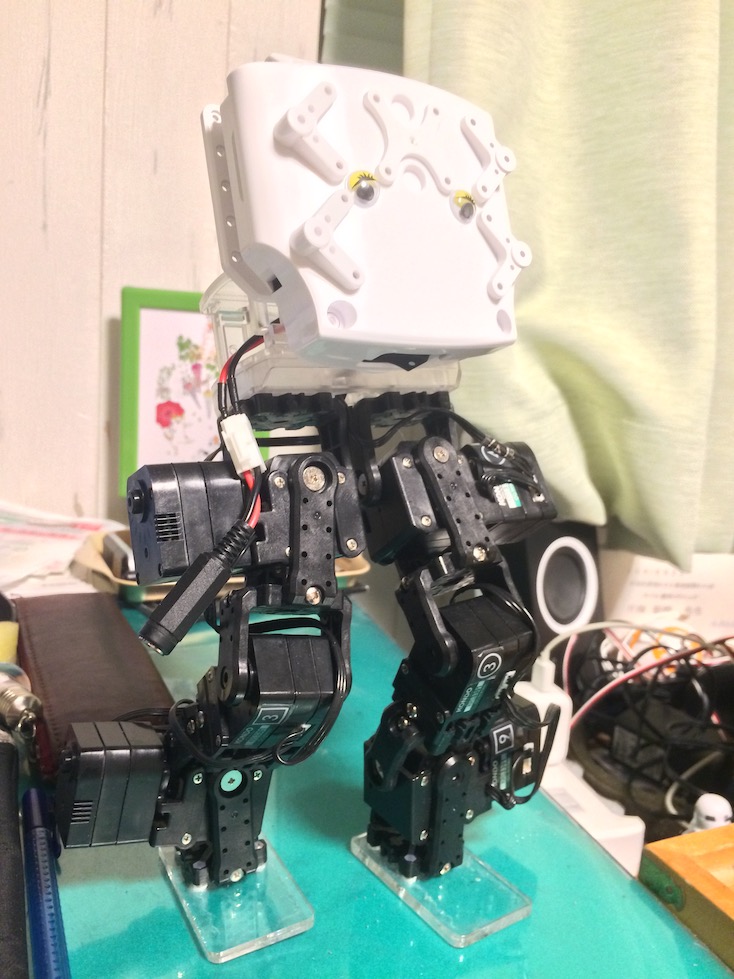

Mini Walker Project

small leg robot that kind of walks

Feb. 2018 ~ Apr. 2018

Watching the video "Biped robot walks just like a human being." inspired me to create a walking mini robot of my own. I first read up on past research on bipedal walking algorithms and found countless walking gaits, ranging from simple (interpolating between known statically stable poses to create motion) to I-have-no-idea-how-it-works complex (using convex optimization to plan dynamic motion trajectories for the Atlas robot).

Here, I implemented a gait based on the LIPM (Linear Inverted Pendulum Mode). It is only briefly described in the video, so please refer to the original paper if you’re interested.

- Kajita S., Kanehiro F., Kaneko K., Yokoi K., Hirukawa H. (2001) The 3D linear inverted pendulum model: A simple modeling for a biped walking pattern generation. In: Proceedings of the 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2001), vol. 1, pp. 239-246. doi:10.1109/IROS.2001.973365

- Garton H., Bugmann G., Culverhouse P., Roberts S., Simpson C., Santana A. (2014) Humanoid Robot Gait Generator: Foot Steps Calculation for Trajectory Following. In: Mistry M., Leonardis A., Witkowski M., Melhuish C. (eds) Advances in Autonomous Robotics Systems. TAROS 2014. Lecture Notes in Computer Science, vol 8717. Springer, Cham
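The core of the LIPM fits in a few lines. With the center of mass (CoM) held at a constant height z_c and the support foot at the origin, the horizontal CoM motion obeys ẍ = (g/z_c)·x, whose closed-form solution is x(t) = x₀·cosh(t/T_c) + T_c·ẋ₀·sinh(t/T_c) with T_c = √(z_c/g), the standard result from the Kajita et al. paper cited above, written here for one horizontal axis. The sketch evaluates it and prints the conserved "orbital energy" as a sanity check; the CoM height and initial state are hypothetical values for a small robot, not the Mini Walker's.

```python
import math
from typing import Tuple

G = 9.81  # gravitational acceleration, m/s^2


def lipm_state(x0: float, v0: float, t: float, z_c: float, g: float = G) -> Tuple[float, float]:
    """CoM position and velocity at time t for x'' = (g / z_c) * x.

    x0, v0: CoM position/velocity relative to the support foot at t = 0.
    """
    tc = math.sqrt(z_c / g)  # time constant of the pendulum
    c, s = math.cosh(t / tc), math.sinh(t / tc)
    return x0 * c + tc * v0 * s, (x0 / tc) * s + v0 * c


def orbital_energy(x: float, v: float, z_c: float, g: float = G) -> float:
    """Quantity that stays constant along any LIPM trajectory."""
    return 0.5 * v * v - g / (2.0 * z_c) * x * x


if __name__ == "__main__":
    z_c = 0.15                # hypothetical CoM height of a mini robot, m
    x0, v0 = -0.03, 0.25      # CoM starts 3 cm behind the foot, moving forward
    for t in (0.0, 0.1, 0.2, 0.3):
        x, v = lipm_state(x0, v0, t, z_c)
        print(f"t={t:.1f}s  x={x:+.3f} m  v={v:.3f} m/s  E={orbital_energy(x, v, z_c):.5f}")
```

A gait generator built on this model chooses footstep positions and step timing so that the CoM, switching from one support foot to the next, follows a sequence of these analytic trajectories.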

Mini Mobile Manipulator

versatile armed robot

Dec. 2017 ~ May 2018

The robot was originally created as the end-of-year project for third-year students at UTokyo (Dec. 2017 ~ Jan. 2018), and I later expanded its capabilities so that it can be controlled through LINE, a popular messaging platform in Japan. It runs ROS, and I also made it compatible with the motion planning library MoveIt!, so that it can move while avoiding collisions with itself and other obstacles. The body was designed in Fusion 360 and made of laser-cut MDF, and the robot arm is made by Kondo Kagaku.

I added the LINE messaging feature for the May Festival exhibits at UTokyo. To prevent users from controlling the robot remotely, I created a verification system using QR codes. A demo can be seen in my newer video (in Japanese).
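One way such a presence check could work, sketched under assumptions (this is not necessarily how the original system was built): a display next to the robot shows a QR code encoding a short-lived token derived from a server-side secret, and the LINE bot only accepts commands from users who send back a currently valid token, which they can only read by standing in front of the robot. The secret, the 60-second window, and all function names are hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"replace-with-a-long-random-secret"  # hypothetical server-side key
WINDOW_S = 60  # hypothetical: a new token (and QR code) every 60 s


def _token_for_window(window: int, secret: bytes = SECRET) -> str:
    """Short token derived from the time window via HMAC-SHA256."""
    mac = hmac.new(secret, str(window).encode("ascii"), hashlib.sha256)
    return mac.hexdigest()[:8]


def current_token(now: Optional[float] = None) -> str:
    """Token to encode in the QR code shown next to the robot."""
    now = time.time() if now is None else now
    return _token_for_window(int(now // WINDOW_S))


def is_valid(token: str, now: Optional[float] = None) -> bool:
    """Accept the current token or the previous one (scanned just before rotation)."""
    now = time.time() if now is None else now
    window = int(now // WINDOW_S)
    submitted = token.encode("utf-8")
    # Constant-time comparison on bytes, so arbitrary user input is safe to compare.
    return any(
        hmac.compare_digest(submitted, _token_for_window(w).encode("ascii"))
        for w in (window, window - 1)
    )


if __name__ == "__main__":
    t = time.time()
    tok = current_token(t)
    print(tok, is_valid(tok, t), is_valid(tok, t + 10 * WINDOW_S))
```

Rotating the token means a photo of the QR code shared online stops working within a couple of minutes, which is what keeps control limited to people at the exhibit.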

Runner's High

Winner at 2018 Bose Challenge hackathon @MIT

Oct. 2018

Team members

- Dominic Co: user experience designer

- Jooyeon Lee: MIT HCI researcher

- me: software engineer

Real-time audio pace feedback with Bose AR

Lover Duck

A connected rubber duck of the future!!

Oct. 2017

- GitHub repo

- Interview article by NS Solutions (新日鉄住金ソリューションズ)

Presented at JPHacks 2017, a student hackathon, by a five-person team of students from the University of Tokyo.

Designed to prevent drowning accidents in the bathtub. The duck has a built-in accelerometer whose data is sent wirelessly to a host PC. When the system detects that the person in the bath is drowning or has passed out, it sends an alert to the server, which updates the web interface and calls family members by phone.
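A minimal sketch of how the accelerometer stream could flag a possible emergency, assuming that a conscious bather keeps the water, and therefore the floating duck, moving, so a long stretch of nearly constant acceleration is suspicious. The window size, threshold, and alert delay are hypothetical, and the actual Lover Duck detection logic may well differ.

```python
import math
import statistics
from collections import deque
from typing import Deque


class StillnessDetector:
    """Raise an alert when the duck has been almost motionless for too long.

    Hypothetical defaults: 50-sample windows, 0.05 m/s^2 spread threshold,
    alert after 3 consecutive quiet windows.
    """

    def __init__(self, window_size: int = 50, motion_threshold: float = 0.05,
                 quiet_windows_for_alert: int = 3) -> None:
        self.window: Deque[float] = deque(maxlen=window_size)
        self.motion_threshold = motion_threshold        # std of |a| in m/s^2
        self.quiet_windows_for_alert = quiet_windows_for_alert
        self.quiet_windows = 0

    def add_sample(self, ax: float, ay: float, az: float) -> bool:
        """Add one accelerometer sample (m/s^2); return True while an alert is active."""
        self.window.append(math.sqrt(ax * ax + ay * ay + az * az))
        if len(self.window) == self.window.maxlen:
            # Evaluate non-overlapping windows: a small spread of |a| means little motion.
            spread = statistics.pstdev(self.window)
            self.window.clear()
            if spread < self.motion_threshold:
                self.quiet_windows += 1
            else:
                self.quiet_windows = 0
        return self.quiet_windows >= self.quiet_windows_for_alert


if __name__ == "__main__":
    detector = StillnessDetector()
    # Perfectly still duck: only gravity is measured.
    alerts = [detector.add_sample(0.0, 0.0, 9.81) for _ in range(150)]
    print("first alert at sample:", alerts.index(True) if True in alerts else None)
```

Using the magnitude of the acceleration makes the check independent of how the duck happens to be oriented in the water.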

I had great fun contributing to this as the hardware engineer (the innards of the duck) and watching my talented teammates in action.

accolades

- Innovator recognition

- sponsor awards

- IBM

- NS Solutions

- FEZ

ARDUroid

Android-controlled reptilian-ish robot

Dec. 2015 ~ Jan. 2016

This robot interfaces an Android device with an Arduino, so the robot can use the processing power, display, internet connectivity, etc. of a smartphone. I developed it as the final project for an Android app development course.

Falcon Heavy footage mixed with music from Apollo 13

Feb. 2018

Just a random idea I had. I made it in just a few hours, but I’m quite proud of how it turned out.

LiS ONE

A working educational model of a space probe

June 2017

Presented at FEEL 2017, an educational space event held in Sagamihara. Created by four members (including me) of the space science communication organization Live in SPACE Project.

This “space probe” has multiple sensors inside (an accelerometer, gyroscope, temperature sensor, and light sensor), communicates wirelessly with mission control (a PC), and runs on a battery. This lets children hold and play with the probe, guessing the role of each sensor and learning about space exploration in the process. We also had a panel describing actual space missions and the data they collected using the same types of sensors as those on LiS ONE.

Video Chart

summarize a video into a beautiful "map"

Feb. 2017

Presented at the 2017 Yahoo! Japan Hackday hackathon by a two-person team. A Python application that takes a video (.mp4) and a subtitle file (.srt) and outputs an interactive summary of its contents.
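The original application is not shown here, but a natural first step for a tool like this is reading the .srt file. A minimal sketch, assuming standard SubRip blocks (an index line, a `start --> end` timestamp line, then one or more text lines) and a hypothetical grouping of subtitle text into fixed-length time slices that a summary could then be built from. The names `parse_srt` and `group_by_interval` are illustrative only.

```python
import re
from dataclasses import dataclass
from typing import Dict, List

# Matches a SubRip timing line such as "00:01:02,500 --> 00:01:05,000".
TIMESTAMP = re.compile(
    r"(\d+):(\d{2}):(\d{2})[,.](\d{3})\s*-->\s*(\d+):(\d{2}):(\d{2})[,.](\d{3})"
)


@dataclass
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str


def _to_seconds(h: str, m: str, s: str, ms: str) -> float:
    return int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000.0


def parse_srt(content: str) -> List[Cue]:
    """Parse SubRip subtitle text into cues, skipping blocks without a timing line."""
    cues: List[Cue] = []
    normalized = content.replace("\r\n", "\n").strip()
    for block in re.split(r"\n\s*\n", normalized):
        lines = block.split("\n")
        for i, line in enumerate(lines):
            match = TIMESTAMP.search(line)
            if match:
                g = match.groups()
                text = " ".join(ln.strip() for ln in lines[i + 1:] if ln.strip())
                cues.append(Cue(_to_seconds(*g[:4]), _to_seconds(*g[4:]), text))
                break
    return cues


def group_by_interval(cues: List[Cue], interval_s: float = 60.0) -> Dict[int, str]:
    """Concatenate subtitle text per time slice (slice index -> text)."""
    groups: Dict[int, List[str]] = {}
    for cue in cues:
        groups.setdefault(int(cue.start // interval_s), []).append(cue.text)
    return {k: " ".join(v) for k, v in sorted(groups.items())}


if __name__ == "__main__":
    sample = (
        "1\n00:00:01,000 --> 00:00:03,500\nHello and welcome.\n\n"
        "2\n00:01:10,000 --> 00:01:12,000\nNow for the second topic,\nwhich spans two lines.\n"
    )
    for cue in parse_srt(sample):
        print(cue)
    print(group_by_interval(parse_srt(sample)))
```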

Virtual Window

Turns your screen into a "window"- without any special hardware

Sep. ~ Oct. 2017

video coming soon, I hope…

TAL 9000

HTML, JavaScript & CSS playground

circa 2013??

A stupid website that I made a long time ago. It’s mostly in Japanese.